“…nothing can be said to be certain, except death, taxes and hard drive failure” Benjamin Franklin, 1789.

Call me old-fashioned, but I still tend to think that the people who best know the capabilities of a product (and how long it will last) are the people who manufacture it. So, as an exercise for the reader:

Why do you think that most Hard Drive Manufacturers provide only 1 year warranties with their drives?

The Problem

With this in mind, I started to investigate the current data retention technologies available for increasing the storage in my HP ProLiant MicroServer (you know, the one everyone bought after HP did a cash back deal – because it seemed like a cheap and good idea at the time).

I wanted to build a resilient repository for family photos and the like – the sort of stuff that is spilling out of USB drives and memory sticks, and getting deleted from digital cameras when they run out of space.

I would like to say that I did the investigation bit first, but having leapt at an offer for two 3TB Toshiba DT01ACA300 drives – I ended up scratching my head as to precisely what to do with them when they arrived …

Given the certainty that both of these drives will fail at some point in the future – at least, before I am no longer capable of operating a computer, I figured that unless there was some common and interrupting event, like a plane falling on the house or a massive power surge – at least these drives are unlikely to both fail at the same time. Therefore if each drive was a duplicate of the other, I reckoned that I had a pretty good chance of maintaining a resilient data storage environment in which I could swap out drives when they failed.

The Woody Plan

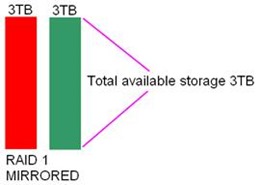

My plan was to use the RAID controller in the HP micro server to do RAID 1 mirroring – i.e. each disk would be a mirror of the other, with files being written to (and read from) both disks. However, once I had sat down and thought about it, I realised that it was quite an inelegant approach and not very cost effective. For although I would continue to have a complete copy of the data if one disk failed, that data would be a mixture of stuff which could effectively have different retention policies – not all of which needed the same level of resilience.

For simplicity, let’s say that I have just two data retention types (although you could have significantly more in your world):

1. Family photos and the like – which need to be retained for the sake of marital harmony.

2. Music MP3’s (rips of CD’s that I own and can be re-ripped), iPlayer downloads, Mrs W’s PDF’s of Next catalogues …etc, etc. This is stuff which, while I am in no hurry to lose it, can be recreated and doesn’t need to sit on a high cost storage array.

Note how, with my proposed two disk mirrored array, I have to provide 6TB of drive space to deliver only 3TB of storage – and a lot of that storage will actually be used up by data that I wouldn’t mind losing (like the Next catalogues).
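The arithmetic behind that complaint can be sketched in a few lines. This is only an illustration: the 1TB figure for photos is a made-up assumption, and the helper name is hypothetical. It compares mirroring everything with duplicating only the data that actually needs it.

```python
def raw_needed_tb(data_tb, copies):
    """Raw disk space consumed when `data_tb` of data is stored `copies` times."""
    return data_tb * copies


RAW_TB = 2 * 3  # two 3TB drives

# Whole-disk mirror: every byte is stored twice, whatever it is.
whole_disk_mirror_usable = RAW_TB / 2

# Selective duplication: only the photos are stored twice.
photos_tb = 1  # hypothetical amount of "must keep" data
photos_raw = raw_needed_tb(photos_tb, copies=2)
left_for_rest = RAW_TB - photos_raw  # single-copy space for re-creatable data
selective_usable = photos_tb + left_for_rest

print(whole_disk_mirror_usable, selective_usable)
```

With these assumed numbers, duplicating only the photos leaves noticeably more room for the re-creatable data than a whole-disk mirror does.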

I guess that you began to read this article in the traditional way by starting at the title … so it will come as no surprise to you that as a result of my research for my own storage options, I became intrigued by the capabilities presented by a technology called Storage Spaces – that is built into the newly released R2 version of Windows Server 2012 (there was a version of Storage Spaces in R1 – but R2 adds some significant additional features).

This is not just for large enterprises

While Windows Server 2012 is generally held to be the preserve of large enterprises, the R2 release offers an entry-level route via the Foundation edition (up to 15 users, running on single-processor servers). Foundation will only be available OEM pre-installed on new servers (like the HP MicroServer) for an uplift, which manufacturers’ current retail pricing suggests will be around £160/$260. OK, so it’s an additional cost, but you have to buy an operating system to put on your new micro server in any case – right?

Of course, there are other versions of Windows Server 2012 R2 (see references at the end) which contain the same Storage Spaces technology and there are products that can simulate some of the capabilities of Storage Spaces in Windows 7 and 8.1 (which I will cover in a later article).

…And just to cover something else off – for those who are wondering why I appear to be endorsing an on-premises approach to storage management in the era of the cloud: there continue to be many IT Managers for whom the provision of on-premises centralised backup, file and print management is still a key service.

Smart Data Ubiquity (SDU) – what is that?

SDU in Windows Server 2012 R2 is the result of a complete paradigm shift in the way we think of provisioning storage:

Imagine building a server which, for simplicity, contains just a bunch of disks (JBOD), which can be a mixture of sizes and technologies – traditional platter drives and SSD’s (although the technology works just as well with NAS, Fibre attached, clustered bare metal or virtual servers).

A number of these disks are then effectively ring-fenced as a Storage Pool (there can be multiple Storage Pools).

Virtual drives – called Storage Spaces – are then defined and mounted as if they are physical drives.

Each Storage Space automatically configures using (but not reserving – as we’ll see later) available space from any of the physical drives in the Storage Pool:

Data written to each Storage Space is then striped across the drives in the storage pool in 256K blocks (block size is adjustable – for optimising block write to match the block size of a tenant application for which you have reserved a dedicated Storage Space).
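To make the striping idea concrete, here is a minimal sketch of plain round-robin striping. It assumes a fixed number of drives ("columns") and the 256K block size mentioned above; the function name `locate` is hypothetical, and this is not a description of how Storage Spaces actually allocates space internally.

```python
INTERLEAVE = 256 * 1024  # 256K block size, as described above


def locate(offset, columns, interleave=INTERLEAVE):
    """Map a byte offset in a striped space to (drive index, byte offset on that drive)."""
    block = offset // interleave   # which 256K block the byte falls in
    column = block % columns       # blocks are dealt out to drives in turn
    row = block // columns         # how many full stripes precede this block
    return column, row * interleave + offset % interleave


# The first three blocks land on drives 0, 1 and 2; the fourth wraps back to drive 0.
for n in range(4):
    print(n, locate(n * INTERLEAVE, columns=3))
```

Because consecutive blocks sit on different drives, a large sequential read or write can keep several drives busy at once.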

The Data bit

There are three types of Storage Space, two of which are resilient by design:

Simple: Data is simply striped across drives in the storage pool. No parity bit is stored, neither is the data mirrored or otherwise duplicated. This type is best suited for ephemeral data – that which no longer needs to exist after an application has closed or for elastic compute environments etc. Don’t back up your photos on this one …but actually, as we’ll see later, a Simple Storage Space is more resilient than a stand-alone drive in situations where disk degradation occurs (but it won’t protect against sudden/catastrophic disk failure).

Parity: Data and associated parity bits are striped across physical disks in the storage pool, but it does need at least 3 physical drives to protect you from one physical disk failure and 7 drives to protect from 2 disk failures etc. So I guess you get better protection from catastrophic disk failure at the cost of reduced usable disk space overall.

Mirror: Data is striped in duplicate data blocks across different physical disks in the storage pool. Again, you suffer some reduction in overall capacity, but it takes only 2 physical drives to protect you from one physical disk failure (a two way mirror) and 5 drives to protect from 2 disk failures. The latter relies on three way mirroring, which Mirror Storage Spaces also support (three copies of everything – for the truly paranoid). I am guessing that when building large Storage Spaces, Mirroring might be the most popular approach, as data from a lost physical drive can simply be block copied from its mirror – rather than being recreated from its parity data – which can be potentially more processor and Bus intensive.
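The difference between rebuilding from a mirror and rebuilding from parity can be shown with a toy example. The sketch below assumes simple single-parity XOR, which is the textbook technique; the source material does not say exactly how Storage Spaces computes its parity, and the block contents are invented.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Two data blocks on two drives, with their parity on a third drive.
data = [b"PHOTO001", b"PHOTO002"]
parity = xor_blocks(data)

# Parity rebuild: the drive holding data[0] dies, so it has to be recomputed
# from everything that survives (the other data block plus the parity).
rebuilt_from_parity = xor_blocks([data[1], parity])

# Mirror rebuild: the surviving copy is simply read back, no computation needed.
mirror_copy = bytes(data[0])
rebuilt_from_mirror = mirror_copy

print(rebuilt_from_parity == data[0], rebuilt_from_mirror == data[0])
```

The parity rebuild has to read every surviving drive in the stripe and do the XOR work, whereas the mirror rebuild is a straight copy.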

The Smart bit

Storage Spaces also have additional inherent capabilities:

Thin Provisioning: This one is a bit mind bending, but it is the ability to provision Storage Spaces whose combined capacity, if they were all filled, would be larger than the total capacity of physical disk space in the Storage Pool. As an example, if the total physical capacity of all the drives in the pool was 10TB, you could provision 15TB (the sky’s the limit) of total Storage Space capacity as long as the actual consumption of space in all the Storage Spaces never exceeded 10TB (you would get an error and a lot of phones ringing if it did …).

Most traditional storage systems run significantly under capacity – as disk partitions generally tend to be specified for the maximum amount of data that users and applications are going to throw at them (plus, say, 20% – if you want to keep your job when something goes wrong). Even if you say that any partition runs, on average, at 70% utilisation – this means, that at any moment in time, there is a lot of disk space sitting idle.

With thin provisioning, you can effectively size Storage Spaces to the same capacity as a traditional sizing model, but on average, with Storage Spaces transient data cycling between max and min consumption, it means that more users/apps can inhabit the same physical drive space. Oh, and the best bit – if you start to run out of space, just bung another drive into the pool!
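A toy model makes the bookkeeping easier to picture. Everything here is an illustrative assumption: the class, the space names and the whole-terabyte sizes are hypothetical, and the real product reports a full pool in its own way rather than by raising a Python exception.

```python
class ThinPool:
    """Toy model of a thinly provisioned pool; all sizes are whole terabytes."""

    def __init__(self, physical_tb):
        self.physical_tb = physical_tb
        self.spaces = {}  # space name -> {"provisioned": tb, "used": tb}

    def create_space(self, name, provisioned_tb):
        # Provisioning reserves nothing, so no capacity check is made here.
        self.spaces[name] = {"provisioned": provisioned_tb, "used": 0}

    def provisioned_tb(self):
        return sum(s["provisioned"] for s in self.spaces.values())

    def used_tb(self):
        return sum(s["used"] for s in self.spaces.values())

    def write(self, name, tb):
        space = self.spaces[name]
        if space["used"] + tb > space["provisioned"]:
            raise ValueError(f"{name} is full")
        if self.used_tb() + tb > self.physical_tb:
            raise RuntimeError("pool has run out of physical capacity")
        space["used"] += tb

    def add_drive(self, tb):
        self.physical_tb += tb


pool = ThinPool(physical_tb=10)
pool.create_space("photos", 8)
pool.create_space("media", 7)   # 15TB promised on 10TB of disk
pool.write("photos", 6)
pool.write("media", 4)          # the pool is now physically full
try:
    pool.write("media", 1)
except RuntimeError as err:
    print(err)                  # this is where the phones start ringing
pool.add_drive(3)               # bung another drive into the pool
pool.write("media", 1)          # the same write now succeeds
```

The point to notice is that `create_space` never checks physical capacity; only `write` does.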

Storage Tiers: As you initially provision or add drives to the Storage Pool, you must specify the tier of each drive that is added. Currently there are two tiers:

– SSD’s

– HD’s

Over time, as data is written and read from a Storage Space, it is identified as high or low frequency accessed data. High frequency or “hot” data will automatically and dynamically be moved to the SSD’s in the storage pool and vice versa, with the lowest frequency accessed data being moved to HD’s.
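As a rough illustration of the idea, the sketch below ranks blocks by how often they were accessed and gives the limited SSD slots to the busiest ones. It is a toy ranking under assumed inputs (a flat access log and a fixed number of SSD slots), not Microsoft's actual heuristic, and the function name is hypothetical.

```python
from collections import Counter


def place_blocks(access_log, ssd_slots):
    """Assign the most frequently accessed blocks to SSD and the rest to HDD."""
    counts = Counter(access_log)
    ranked = [block for block, _ in counts.most_common()]  # busiest first
    hot = set(ranked[:ssd_slots])
    return {block: ("SSD" if block in hot else "HDD") for block in counts}


# Block "a" was touched 5 times, "c" 3 times and "b" once; only two SSD slots exist.
log = ["a"] * 5 + ["b"] + ["c"] * 3
print(place_blocks(log, ssd_slots=2))
```

Re-running the placement over a newer access log is what moves data between tiers as usage patterns change.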

Write cache: (AKA Write-back cache): Each Storage Space, as long as it contains a physical number of SSD’s appropriate to its type (Simple, Parity or Mirror) is automatically provisioned with a 1GB write cache. This allows the initial buffering of either the types of random writes common in enterprise applications or high density streaming writes. Data is then background striped across the Storage Space – smoothing jitter caused by data write bottlenecks.
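The buffering behaviour can be sketched as follows. This is a deliberately simplified model: the class is hypothetical, a Python list stands in for the slower striped storage, and a tiny capacity is used instead of 1GB so the flush is easy to see.

```python
class WriteBackCache:
    """Toy write-back cache: absorb writes quickly, flush them to slow storage in batches."""

    def __init__(self, capacity_bytes, backing):
        self.capacity = capacity_bytes
        self.backing = backing      # a list standing in for the striped drives
        self.pending = []           # writes acknowledged but not yet flushed
        self.pending_bytes = 0

    def write(self, data):
        # If this write would overflow the cache, drain it to the backing store first.
        if self.pending_bytes + len(data) > self.capacity:
            self.flush()
        self.pending.append(data)
        self.pending_bytes += len(data)

    def flush(self):
        self.backing.extend(self.pending)
        self.pending.clear()
        self.pending_bytes = 0


disks = []
cache = WriteBackCache(capacity_bytes=8, backing=disks)
cache.write(b"abcd")
cache.write(b"efgh")   # the cache is exactly full, nothing has reached the disks yet
cache.write(b"i")      # this forces the first two writes out to the disks
print(disks, cache.pending)
```

The writer only ever waits for the fast cache, which is how bursts of small writes get smoothed out.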

The ubiquitous bit

This, to me, is the most exciting and coolest resilience bit – which kind of ties it all together:

Storage Pools (of physical disks) automatically self heal. If a drive is detected as failing or otherwise degrading, its data will automatically be moved to free space on the other physical drives in the Storage Pool.

Storage Pools can also contain a pre-designated Hot Spare Drive which a Storage Space will then use first for healing – in preference to free space on the other drives.

Similarly, additional physical drives can be added to a Storage Pool at any time and the Storage Spaces will rebalance.
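The healing behaviour described above can be sketched as a simple evacuation routine. This is a toy model under stated assumptions: drives are dictionaries, capacity is counted in blocks, and the names are hypothetical. It also ignores a rule a real system would need, namely keeping the two halves of a mirror on different drives.

```python
def free(drive):
    """Number of unused block slots on a drive."""
    return drive["capacity"] - len(drive["blocks"])


def heal(pool, failing):
    """Move every block off the failing drive, using a hot spare first if one has room."""
    evacuees = pool.pop(failing)["blocks"]
    for block in evacuees:
        candidates = [d for d in pool.values() if free(d) > 0]
        if not candidates:
            raise RuntimeError("no free space left in the pool to heal into")
        # Hot spares sort ahead of ordinary drives; after that, most free space wins.
        target = max(candidates, key=lambda d: (d["hot_spare"], free(d)))
        target["blocks"].append(block)
    return pool


pool = {
    "disk1": {"capacity": 4, "blocks": ["a", "b"], "hot_spare": False},
    "disk2": {"capacity": 4, "blocks": ["c"], "hot_spare": False},
    "spare": {"capacity": 4, "blocks": [], "hot_spare": True},
}
heal(pool, "disk1")
print(pool)
```

Without a hot spare in the pool, the same routine simply spreads the evacuated blocks over whichever drives have the most free space.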

There’s more ….

This is but a taster to start you out on the Windows Server 2012 R2 SDU journey.

There is so much more that I have left out for simplicity – like how you configure and provision Storage Spaces with the Server console or PowerShell cmdlets, how you manage TRIM and Defrag and the time and frequency of high frequency data being optimised to SSD, and how you manage fail over clustering and multitenancy etc.

Continue the Smart Data Ubiquity journey here:

http://social.technet.microsoft.com/wiki/contents/articles/15198.storage-spaces-overview.aspx

© Woody – October 2013

[This article is original work – over which I own and retain copyright. However, it is based on multiple Microsoft documentary sources placed in the public domain and I acknowledge and thank Microsoft for that. You are welcome to use and otherwise republish this article as you will – but I just request that you continue to attribute me as the original author. Thanks.]

I’ve been thinking about playing with storage space for a while now, think I’m going to have to give this a go for my home server replacement.